On October 20th I attended the AgileTour Toronto 2010 conference with a colleague. Well, calling it a “conference” is a bit generous but I’m not unhappy about it. It was warm and cozy. I’ll explain in more detail in some other posts.

For me, the big highlight of the day was Martin Fowler’s keynote. Let me rephrase: Fowler gave 3 keynotes in one, which is nice because it’s not too boring and it flows smoothly.

The essence of Agile

In the first keynote Fowler wanted to clarify his view on Agile, talking about its essence. His motivation was to steer clear of a phenomenon that he calls Semantic Diffusion: when you start being successful at innovating and modulating a message, people try to copy you without deeply understanding what you’re doing, just by mimicking your work and distorting your ideas badly. There’s not a lot that we can do about it, with the exception of reminding people about the core principles at the root of what we are doing.

So he wanted to characterize Agile first of all by difference, contrasting it with traditional plan-driven methods. In a plan-driven method, success is achieved when you do something according to the plan. This is not absolutely impossible per se, but it requires at the very least that the requirements are stable. The bad news is that outside of very limited and insignificant niches, there is NO such thing as stable requirements (and we all know it very well).

So Agile methods are characterized by adaptive planning, which means continuous planning and releasing early and often. These qualities together constitute an evolutionary design approach, which I’d prefer to define differently and at the software design level in terms of responsive design, but that’s fine, I can take this.

Fowler also briefly mentioned a quote from the Poppendiecks, which sounds more or less like this: being able to deal with a lot of changes in requirements is a competitive advantage. This is a fairly powerful tool in your hands, so use it wisely.

Another fundamental quality of Agile is its focus on people. We all know that after the industrial revolution the way we think about work has been shaped too much and for too long by Taylor and his stopwatch, the so-called system process. This has greatly slowed down the evolution of knowledge work, and it has taken a long time and great minds like Deming and Drucker to correct these problems and show a different path. As Deming said, “A bad process would beat a good person every time”. So Agile methods are characterized by putting that good person at the core of the method and allowing her/him to shine and do great things, rather than suffocating her/him with bureaucracy.

Because of this, Agile methods need to be adapted to the specificities of the people and their organization, while maintaining the other characteristics mentioned above (while this is fundamental, it is also another contributor to Semantic Diffusion, but again we can’t do much about it). So, Agile methods are characterized by self-adaptivity. As Kent Beck says, “In software development, ‘perfect’ is a verb, not an adjective”. This means that the process is never static, but is continuously verified and improved, always striving for perfection (but of course never achieving it fully).

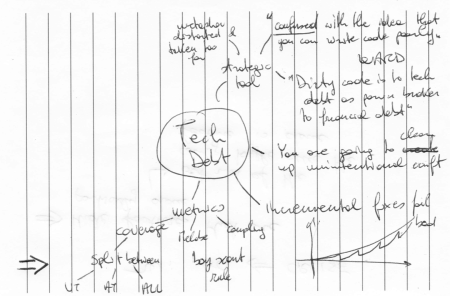

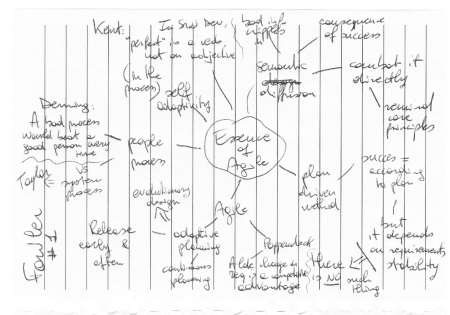

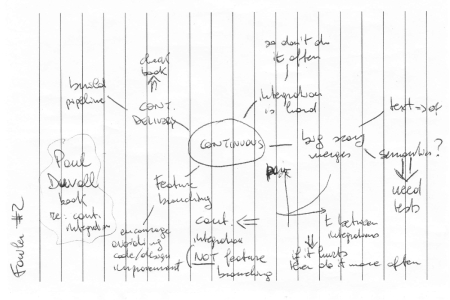

This is the mind map that I drew live during Fowler's first keynote

Continuous integration & delivery

In the second keynote Fowler focused on continually adding value to a system.

He started by describing an experience that is all too common if you developed software for a living in the previous millennium: if you have worked in an environment that doesn’t adopt continuous integration, then you know that a project can easily be doomed, staying in integration mode for a very long time.

The idea is simple:

- Individual developers work on their own code, that is, they develop features independently

- Towards the end of the project they put everything together and of course nothing works

The metaphor is having 2 teams digging a tunnel into a mountain from 2 opposite sides and failing to meet in the middle. I actually have seen something like this happening in big construction work in Italy. It’s scary.

Even when versioning systems became popular, people tended to do feature branching more than anything else:

- Every developer has his/her own branch, defined for the feature that he/she is developing

- Because integration is difficult and painful, people integrate less often

- Because integration happens less and less often, when it actually happens it is even more difficult and painful

This leads to what Fowler calls big scary merges, where you merge a lot of files together. Even with sophisticated tools that merge automatically, and even when the code looks good from a textual perspective, what about the semantics? You need tests to tell you whether the merge was good or not. And you have a lot of red bars for a very long time, because no tool can automatically reconcile, for example, a slight change in the semantics of the protocol between two classes.
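To make the point about semantics concrete, here is a minimal sketch of a merge that is clean as text but broken in meaning. The function names and the units scenario are hypothetical, invented by me purely for illustration and not taken from the keynote: one branch changes `distance` to return metres instead of kilometres, while another branch adds a caller that still assumes kilometres.

```python
# Hypothetical example: all names and the scenario are assumptions for illustration.

# Branch A changed this function to return metres (it used to return kilometres).
def distance(start_km: float, end_km: float) -> float:
    """Return the distance between two points, in metres."""
    return (end_km - start_km) * 1000.0


# Branch B added this caller, written against the old contract (kilometres).
def travel_time_hours(start_km: float, end_km: float, speed_kmh: float) -> float:
    """Return the travel time in hours, assuming distance() returns kilometres."""
    return distance(start_km, end_km) / speed_kmh


def check_travel_time() -> bool:
    """A test from branch B: 100 km at 50 km/h should take 2 hours."""
    return abs(travel_time_hours(0.0, 100.0, 50.0) - 2.0) < 1e-9


if __name__ == "__main__":
    # The two edits touch different lines, so a textual merge reports no conflict.
    # Only running the test reveals the broken protocol between the two functions.
    print("merge is semantically sound:", check_travel_time())
```

The merged file compiles and reads fine, yet the check fails, which is exactly the kind of red bar that only a test suite can raise.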

The amount of pain that a team suffers grows exponentially with the amount of time that elapses between integrations. I mean, more pain = more time & more bugs = more costs & fewer opportunities to generate revenue.

In the Agile world, typically when something hurts you wanna do it more often. What does this mean? It means that when something is problematic, we want to make it simpler and less expensive. So rather than burying our heads in the sand and trying to postpone the pain as much as possible, we prefer to tackle it right away and make it better before it’s too late.

So, we want to do continuous integration many times a day, which is exactly what happens at my main gig (my beautiful day job) and in many other companies. I’d say that continuous integration has become more or less mainstream these days, which is good. For example, yesterday we integrated our code something like 30 times. Integrating means compiling, building all the artifacts, and running various kinds of checks and thousands of tests. All this is entirely automated.

A bad consequence of feature branching, by the way, is that it discourages code/design improvement (because merging the improvements is painful). So the code quietly rots, day after day, to the point where it becomes a big steaming pile of PUT HERE YOUR FAVOURITE EXPRESSION. Been there, done that, never want to do it again in my life.

En passant, Fowler recommended Paul Duvall’s book on continuous integration.

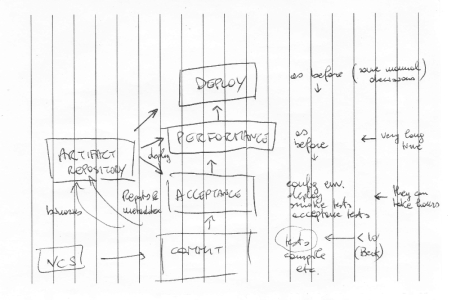

Towards the end of this part Fowler spoke about continuous delivery, which is a big topic. For the non-technical people out there, the basic idea is to stretch continuous integration into the actual deployment. How can you do that? By building a pipeline of machines where:

- The code is automatically compiled and unit tested => this should take less than 10 minutes

- Artifacts are generated into a repository

- Artifacts are taken and deployed in a different environment where all functional/acceptance tests are executed in a timeframe that can be measured in hours for complicated systems

- The next stage is typically the one where performance tests are executed to ensure that the system is still executing in an acceptable way and again it can take hours, even more than the previous stage

- If everything is fine the code can be automatically deployed in production in a controlled way, where you do smoke tests to see that nothing is horribly broken

Fowler emphasized that while the last step is typically controlled manually, and it may vary a lot from org to org (e.g. you can’t do it automatically if you have to ask a committee for permission in a bureaucratic organization), the infrastructure should be in place to do it automatically, with a simple click of a button. As Fowler said: “There should be no technical reasons impeding an automated deployment, while it’s perfectly reasonable that there are business reasons to batch many commits in a single deployment controlled manually”.

Of course Fowler recommended Jez Humble’s book.
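For the technically inclined, the pipeline can be sketched in a few lines. This is my own minimal illustration under stated assumptions, not Fowler's design nor any real tool's API: the `Stage` class, the `run_pipeline` function and the stage names are hypothetical, and the stage bodies are placeholders that always pass. It shows two properties: stages run in order and stop at the first failure, and the production stage sits behind a manual gate even though it is fully automated.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    """One pipeline stage (hypothetical structure, for illustration only)."""
    name: str
    run: Callable[[], bool]    # returns True when the stage passes
    manual_gate: bool = False  # True when a human must approve before it runs


def run_pipeline(stages: List[Stage], approved: bool = False) -> List[str]:
    """Run stages in order; stop at the first failure or unapproved manual gate."""
    log: List[str] = []
    for stage in stages:
        if stage.manual_gate and not approved:
            log.append(f"{stage.name}: waiting for approval")
            break
        passed = stage.run()
        log.append(f"{stage.name}: {'passed' if passed else 'failed'}")
        if not passed:
            break
    return log


# Placeholder stage bodies: a real pipeline would call build and test tools here.
stages = [
    Stage("commit build and unit tests", lambda: True),
    Stage("publish artifacts", lambda: True),
    Stage("acceptance tests", lambda: True),
    Stage("performance tests", lambda: True),
    Stage("deploy to production and smoke test", lambda: True, manual_gate=True),
]

if __name__ == "__main__":
    for line in run_pipeline(stages, approved=False):
        print(line)
```

Calling `run_pipeline(stages, approved=True)` plays the role of the button click: the decision is a business one, while the deployment itself needs no manual work.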

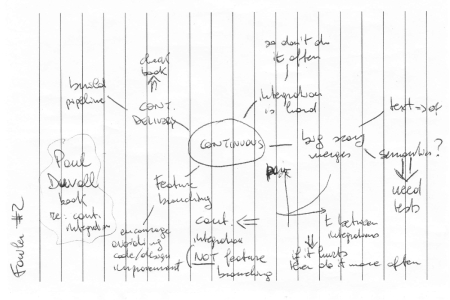

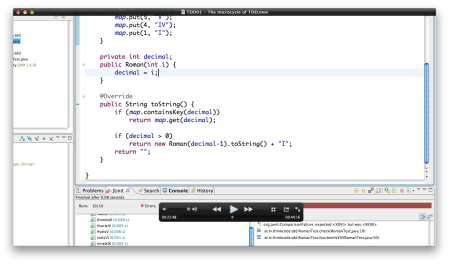

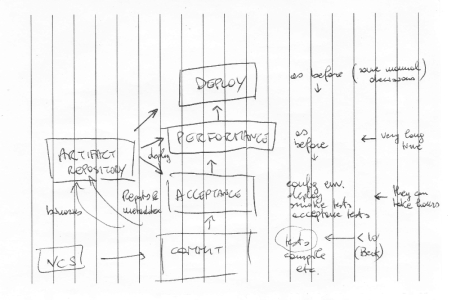

This is the mind map that I drew live during Fowler's second keynote

A sketch of the continuous delivery pipeline drawn by Fowler

Effort, quality and feature development

This third part can be summarized with the following quote: We need to put less effort on quality to put more effort on feature development.

At first glance, this seems sacrilegious, but if you think about it, it doesn’t talk about results, it talks about effort. Hence, it’s actually good to achieve high quality without putting much effort into it, so that we can devote this effort to the more labour-intensive feature development. Or anyway, let’s put effort where our labour is more likely to pay us back directly.

Fowler started by mocking Uncle Bob and his preaching attitude towards quality. It was actually very funny and I think that Fowler is right. Nevertheless, in all fairness Uncle Bob makes the point that all effort devoted to quality pays you back in terms of sustainability and efficiency over time. But certainly he deserved to be mocked, albeit with a great deal of sympathy.

Fowler wondered whether quality is tradable. He makes the distinction between internal and external quality:

- Internal => It’s not visible: it’s about software design, cohesion and coupling, code, architecture, etc. It’s like the engine of a car, a kind of magic hidden under the hood: if it’s not in order, all of a sudden the car will break down and you’ll be left to rely on your feet alone

- External => It’s the classic concept of quality in terms of visible behaviour or features of a system, what customers and end users experience out of it

Fowler’s point, and I can’t agree more, is that while external quality is tradable, internal quality is not. Now, we should open a big parenthesis here, related to the differences between startups and sustainable development, the cost of missing opportunities, but for now bear with me. Let’s just say that in the long run, internal quality is overall not tradable. I think that we would all agree on that.

Fowler goes on with his Design Stamina Hypothesis, in order to explain what I just briefly synthesized. The graph from Fowler’s article says it all I suppose.

The idea is that beyond a certain critical mass, the only way to have your system growing linearly with your effort is to keep things in order in the house. Otherwise, development slows gradually.
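A toy model may help to see the shape of that graph. Everything in it is an assumption of mine and not Fowler's data: the function names are hypothetical, the well-designed system is assumed to deliver a constant 8 features per week, and the system without design is assumed to start at 10 features per week and lose 5% of its speed every week as cruft accumulates.

```python
from typing import List, Optional


def cumulative_features(weeks: int, initial_rate: float, weekly_slowdown: float) -> List[float]:
    """Cumulative features delivered after each week.

    The weekly rate is multiplied by (1 - weekly_slowdown) after every week,
    so weekly_slowdown = 0.0 models a code base that stays easy to change.
    """
    total, rate, totals = 0.0, initial_rate, []
    for _ in range(weeks):
        total += rate
        totals.append(total)
        rate *= 1.0 - weekly_slowdown
    return totals


def payoff_week(with_design: List[float], without_design: List[float]) -> Optional[int]:
    """First week (1-based) in which the well-designed system is ahead, or None."""
    for week, (good, bad) in enumerate(zip(with_design, without_design), start=1):
        if good > bad:
            return week
    return None


# Assumed, purely illustrative numbers (see the paragraph above).
with_design = cumulative_features(weeks=52, initial_rate=8.0, weekly_slowdown=0.0)
without_design = cumulative_features(weeks=52, initial_rate=10.0, weekly_slowdown=0.05)

if __name__ == "__main__":
    print("design pays off in week:", payoff_week(with_design, without_design))
    print("after a year:", with_design[-1], "vs", round(without_design[-1], 1))
```

With these made-up numbers the team that skips design is ahead for the first few weeks and then falls behind for good. The exact crossover point depends entirely on the assumed rates, so the model only illustrates the shape of the hypothesis.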

Then Fowler talks a little bit about the Technical Debt metaphor, which I will explain in more detail when talking about another session. Just think that every bit of cruft that creeps into the system is going to settle and accumulate, and you’ll have to pay it back like accrued interest on the principal. It’s a very powerful metaphor that explains well certain phenomena that occur in aging code bases.

Lastly Fowler put up a nice table that tries to classify the different ways in which a team can get in Technical Debt.

| | Reckless | Prudent |
| --- | --- | --- |
| Deliberate | no time for design | ship now and deal with consequences |
| Inadvertent | what is layering? | now we know how we should have done it |

I think that he was being nice, because I would have used Incompetent instead of Inadvertent. Anyway, the only quadrant where it can be temporarily acceptable to be is Deliberate/Prudent, but you don’t wanna stay there for very long. Certainly never Reckless.

So, in the end, the conclusion is that to put more effort into feature development you have to put more continuous effort into internal quality, without compromising in the long run, or this will slow you down more and more and more. I don’t remember if Fowler explicitly said that but that was clearly the whole point.

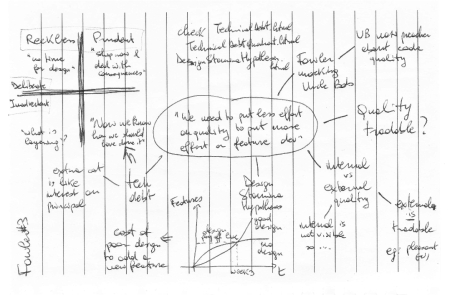

This is the mind map that I drew live during Fowler's third keynote

To recap, an enjoyable keynote, or series of keynotes if you prefer, delivered by Fowler in a very pleasant way. For me, the keynote alone was well worth attending the conference.